How Strikethrough took over the world.

We invented a whole library of proofreading marks, then the strikethrough overtook them all.

The first Office tools came on tablets in Mesopotamia. For there, in the cradle of civilization, they “patted some clay and put words on it, like a tablet,” as a Sumerian poem related.

Mesopotamians did everything on clay tablets: wrote contracts, calculated wealth, and complained about poor customer service. They made spreadsheets, of a sort, with columns and rows of numbers etched meticulously into clay.

Once dry, words on clay tablets were nearly as good as etched into stone, preserving ledgers and letters for millennia. For those first crucial moments after putting pen to clay, though, tablets were still at least somewhat malleable.

And so humanity’s earliest authors would smooth out their mistakes.

They’d either “attempt to retouch the clay and fill back in the wedges of a mistake,” or write the correct letter on top of the incorrect one if possible. “It’s not easy to remove a mistaken letter completely from clay,” found University of Illinois professor Wayne Pitard, and traces of those early mistakes and coverups live on in preserved clay tablets.

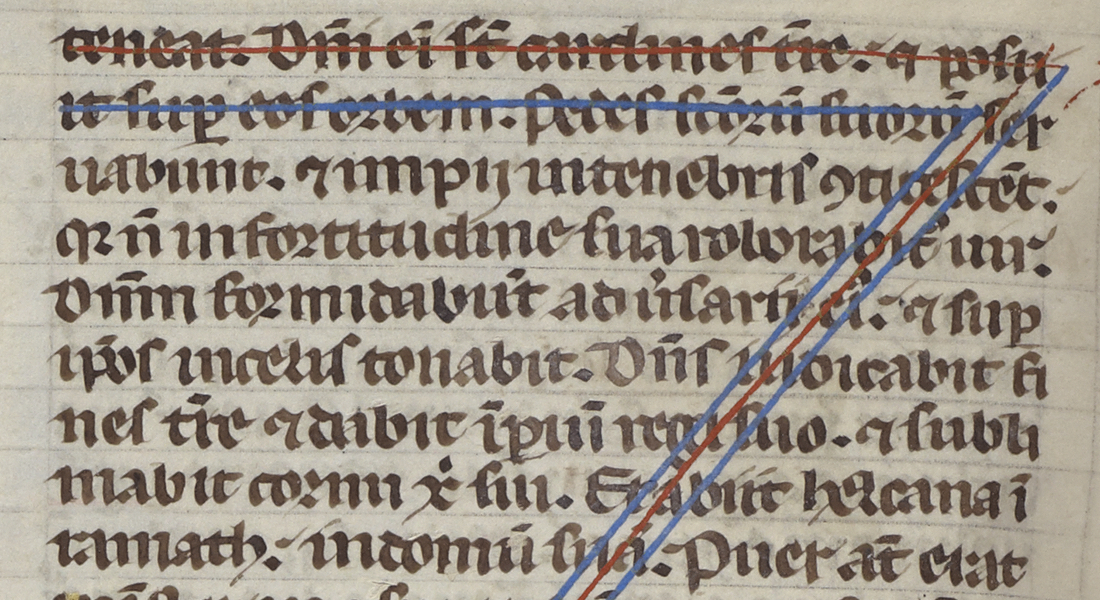

We’d keep trying to find better ways to cover our mistakes, changing language along the way. The eraser was invented a mere 250 years ago; before that, people rubbed mistakes out of parchment with stones or scraped bits of ink-filled paper off with a knife. The wealthy would gild over their mistakes, covering miswritten words with gold leaf. Scribes would draw a line through mistakes, an early take on the strikethrough. Commoners had to choose between snacking on bread and rubbing letters off paper with it. When erasers were invented in 1770 from what was then called gum elastic, being able to rub words off paper felt so monumental that we renamed the material itself rubber.

Erasers weren’t enough, though, when the printing press could replicate a mistake hundreds of times before it was discovered.

Automation and the creation of jobs.

“The first products of the printing-press show abundant evidences of the non-existence of any one specially charged with the duty of correcting the compositors' mistakes,” quips the 1911 Encyclopædia Britannica’s entry on proofreading.

There was the Bug Bible of 1535, which told readers not to fear bugs in the night, rather than terrors. Then came the Wicked Bible of 1631, with a commandment that “Thou shalt commit adultery.” For want of a not, the printer lost £300 and their printing license.

Printing errors were so prevalent that over 2% of the words in the first printing of Shakespeare’s First Folio were incorrect.

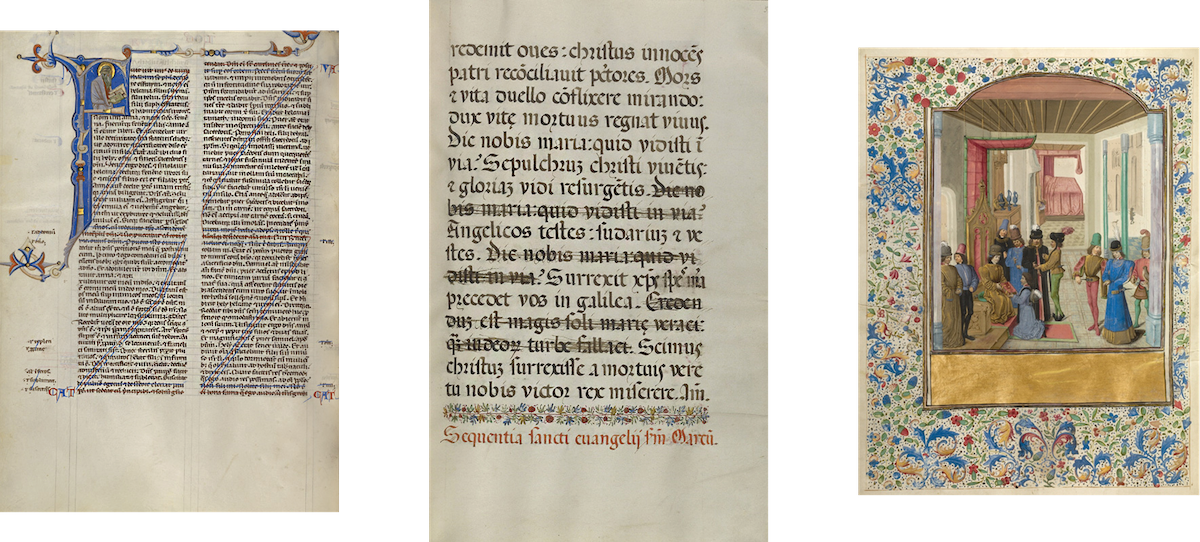

Enter the proofreader. The printed word might have become commoditized, but no one wanted hundreds of copies of misprinted text, let alone to accidentally issue new commandments. And so “it became the glory of the learned to be correctors of the press,” said British author Isaac D'Israeli.

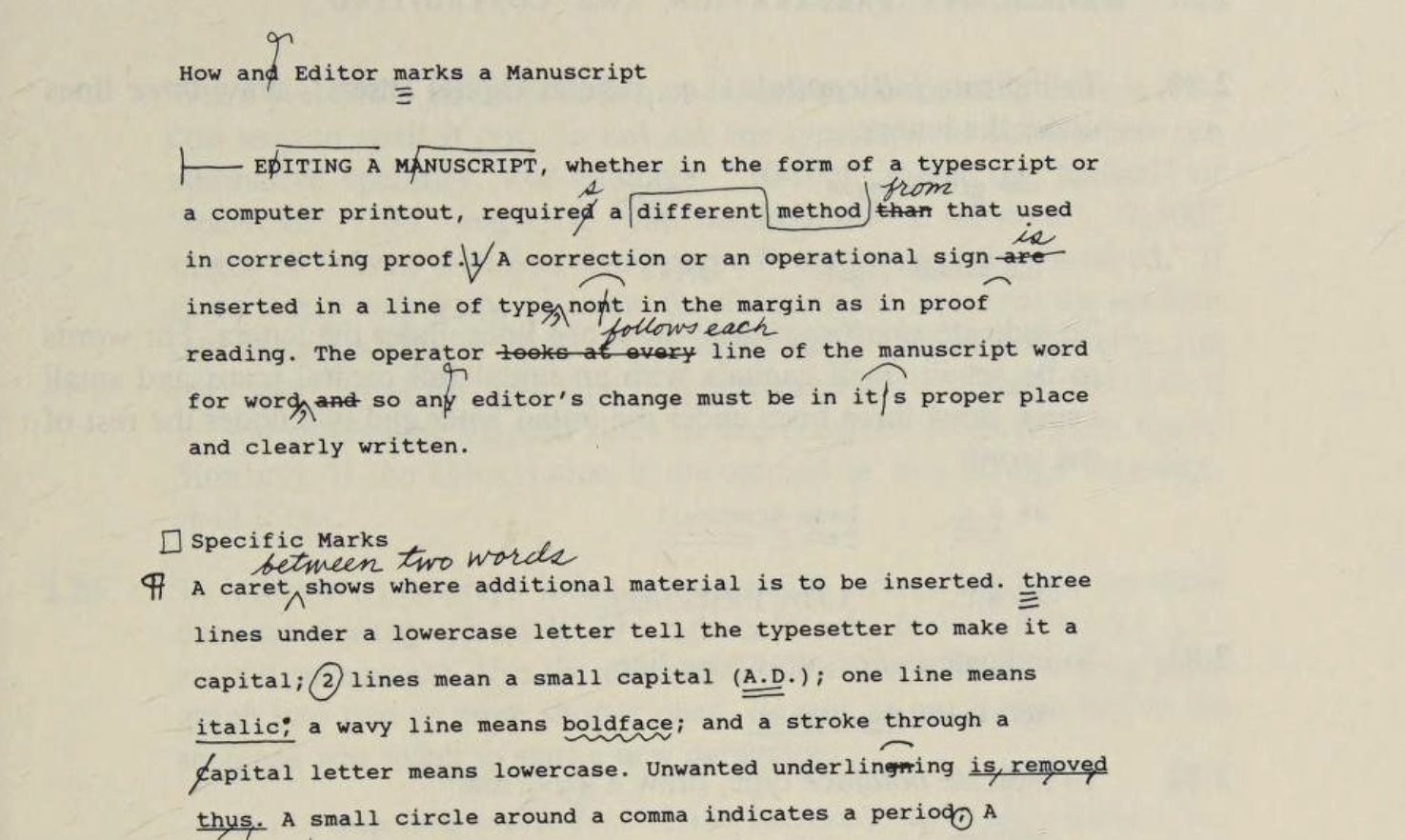

Proofreaders would check every word, dot every i, cross every t. A new markup language of proofreading marks sprang up for proofreaders to tell authors what needed fixing.

History informed the most obvious of marks. A stylized strikethrough was used to delete words, something borrowed from scribes (who, interestingly enough, originally used strikethrough to emphasize text, only later moving the line down to underline emphasized text and using the strikethrough to cross out mistakes). Carets were used to insert text, another editing trick carried over from the Middle Ages.

There were ;| and :| to insert a semicolon or colon, ][ to center text, and # to add space. Proofreaders would even proofread themselves, adding (stet) to let things stand as is and undo extraneous edits.

When the 15th edition of the Chicago Manual of Style was published, 563 years after Gutenberg invented his printing press, seven pages of the tome were devoted to proofreading marks. Proofreading had, by then, become a global standard, with ISO 5776 and its thirty-six pages devoted to standardizing “symbols for text proof correction.”

And then there was one.

Only, by then, a single proofreading mark had survived: the strikethrough.

All it took was a single software update to change the world of proofreading.

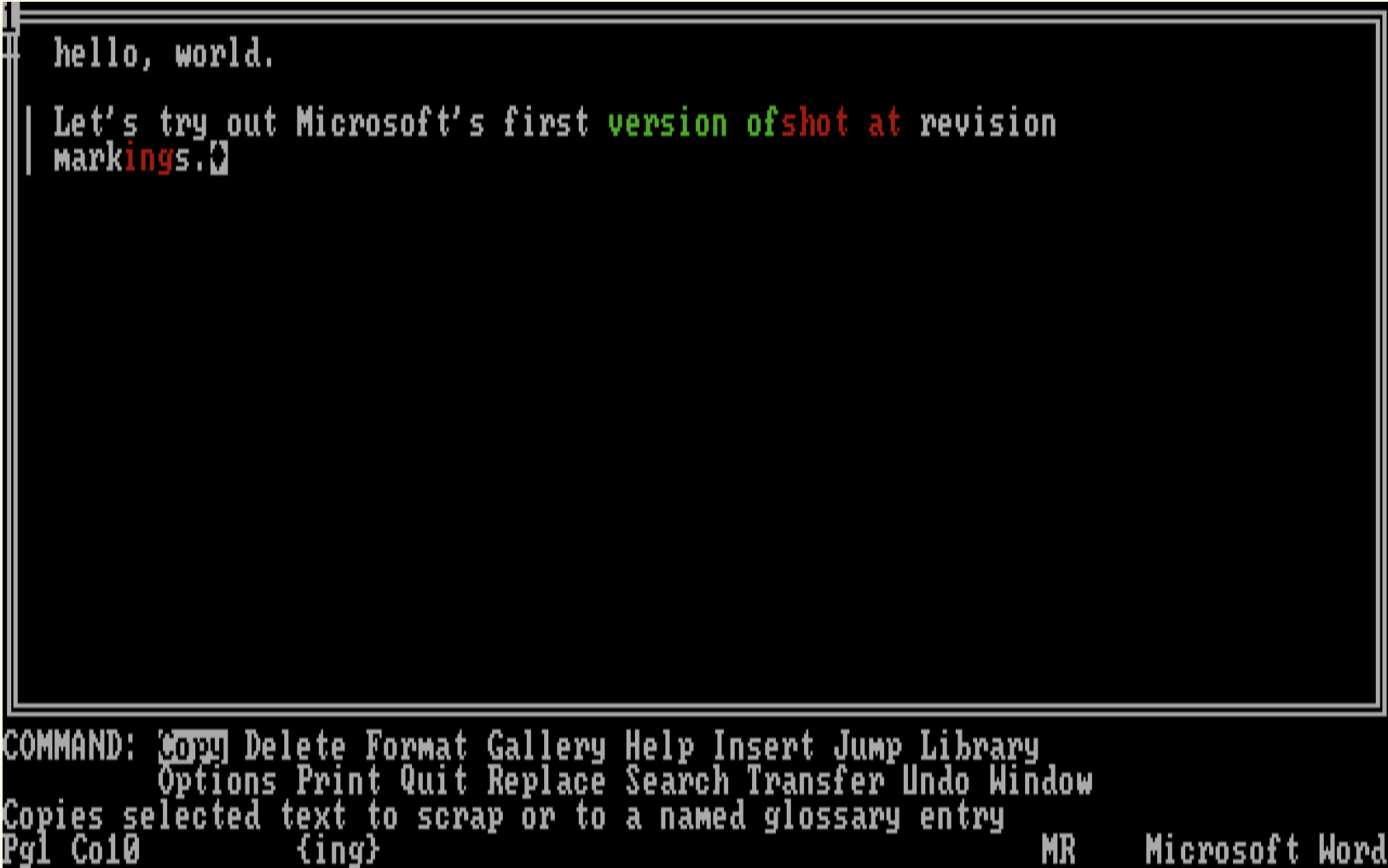

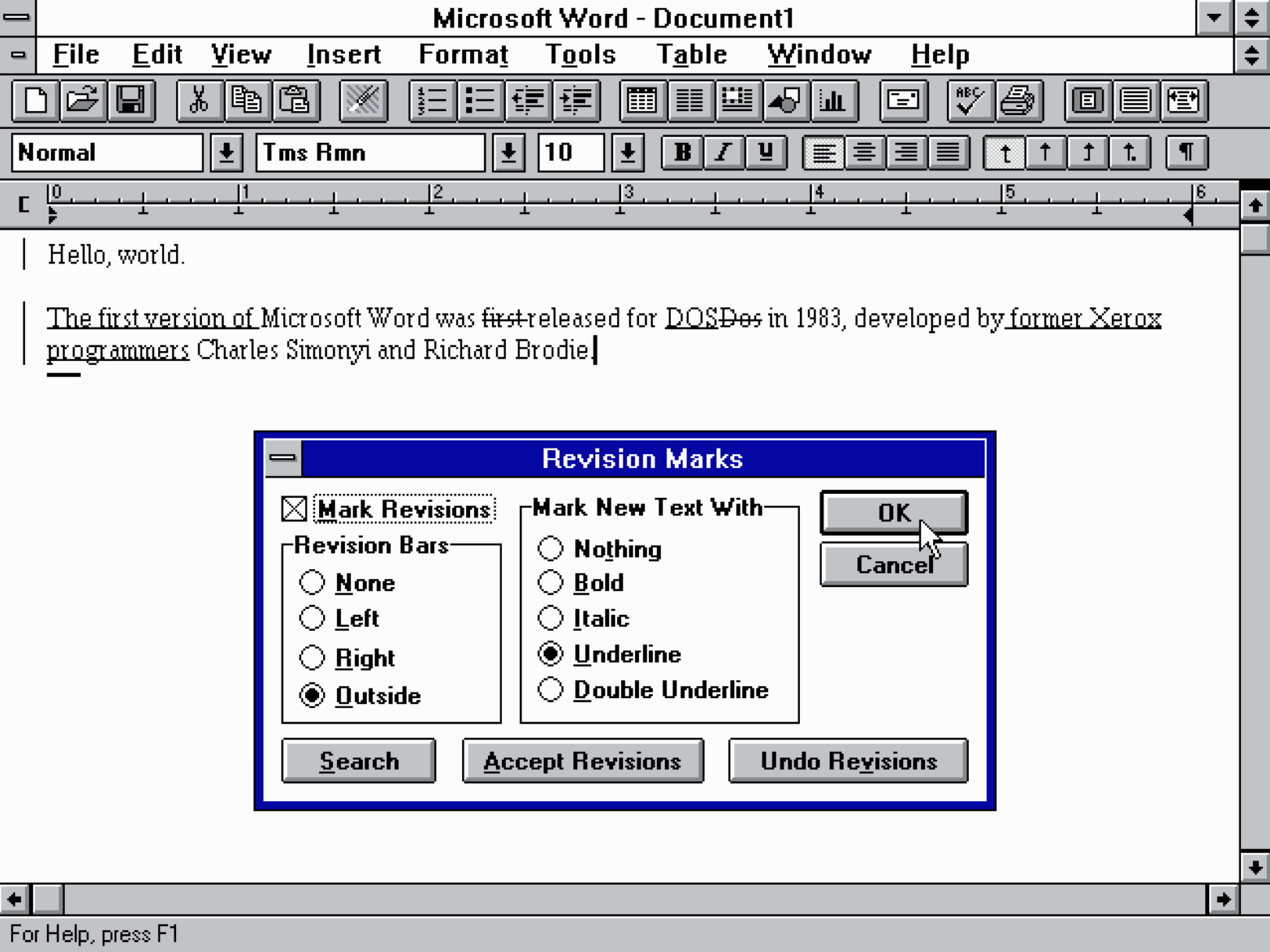

It all started in 1987, with the fourth version of Microsoft Word, the tool that was quickly becoming the default way to write on then-new personal computers. With it came a new tool: Revision Marks. Word could track the letters you deleted and added, by default underlining new text or highlighting it green, and marking deleted text in red.

Then came Windows and a more graphical version of Word. This time, the defaults were changed. No more bright colors; Word now struck through deleted text and underlined the new. You could change how Word marked new text, making it italic or double-underlined if you wanted. But there was only one way to mark deleted text: strikethrough.

There was little reason on the PC to add additional proofreading marks. You could simply add a missing space or period or colon, or remove extraneous editing before you saved the document. All proofreading was inserting or deleting something, after all.

The strikethrough stuck, standardized as the last true proofreading mark.

Adobe’s InCopy strikes through deleted text and highlights new additions. So does Apple’s Pages. When Google Docs brought document editing online, it too kept the strikethrough tradition alive. The latest Chicago Manual of Style devotes merely two pages to proofreading marks; the strikethrough, or what it calls a “line through a letter or word,” is one of the few marks that still gets significant focus. Sometimes we don’t even edit the strikethroughs out now; they’ve become an ironic way to include two different turns of phrase in the same text, to say no, this is what we really meant.

It all started when a scribe messed up and scratched out lines of text on parchment. Little did they know they’d just invented the only proofreading mark that’d last.